Shape Guided 3D

Overview

This is a shape-guided expert learning framework for unsupervised 3D anomaly detection.

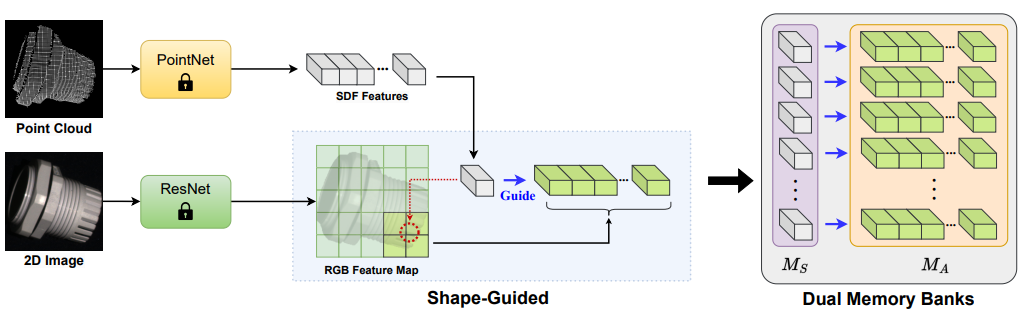

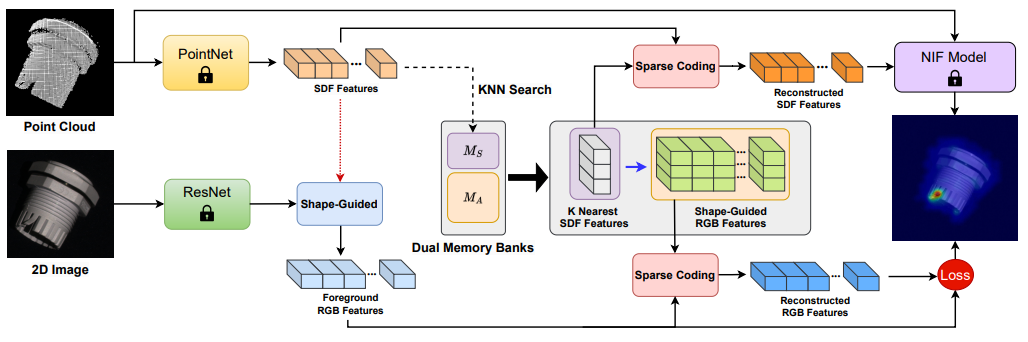

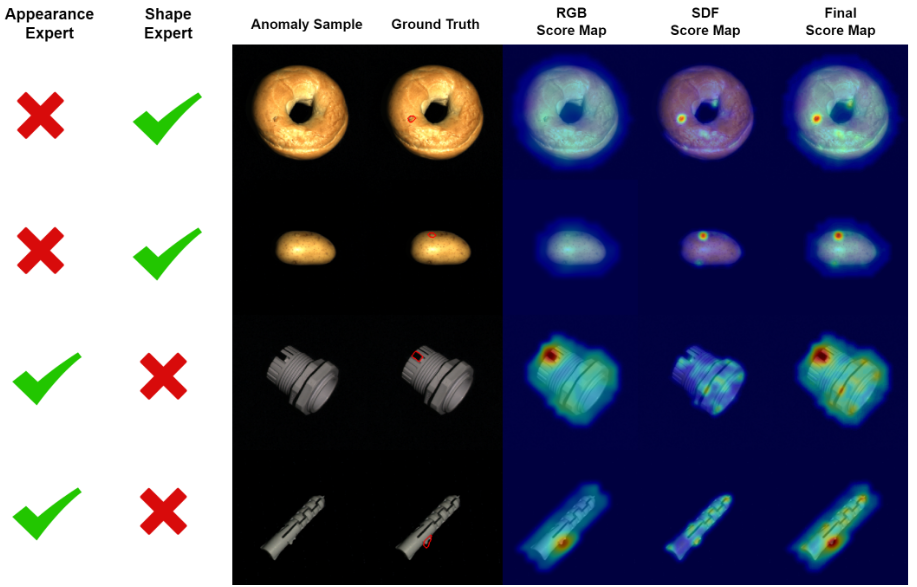

The method relies on two specialized expert models, and on their synergy, to localize anomalous regions from the color and shape modalities. The first model (Shape Expert) uses geometric information to probe 3D structural anomalies. The second model (Appearance Expert) considers the 2D RGB features associated with the first model to identify color abnormalities.

Original repository: https://github.com/jayliu0313/Shape-Guided.git

Principles

Since the neural implicit function (NIF) is shared by all 3D patches, only each patch's feature vector f needs to be stored in the SDF memory bank (Ms). Each patch corresponds to a region in the RGB feature map, which yields an SDF-specific RGB dictionary. All such shape-guided RGB dictionaries are saved together to form the RGB memory bank (MA).
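A minimal sketch of how the two memory banks could be laid out for a single training sample, assuming NumPy arrays and assuming the region of the RGB feature map covered by each patch is already known as a list of flat pixel indices. `build_memory_banks` and its arguments are hypothetical names, not part of the `ecos_core` API.

```python
import numpy as np


def build_memory_banks(patch_features, rgb_feature_map, patch_pixel_indices):
    """Hypothetical layout of Ms and MA for one sample.

    patch_features: (P, d) array, one SDF feature vector f per 3D patch.
    rgb_feature_map: (H, W, c) array of RGB features for the same sample.
    patch_pixel_indices: P sequences of flat pixel indices, the region of the
        RGB feature map that each patch corresponds to.
    """
    # Ms: the NIF decoder is shared by every patch, so only f is stored.
    m_s = np.asarray(patch_features, dtype=np.float32)

    # MA: one SDF-specific RGB dictionary per stored patch feature,
    # i.e. the RGB feature vectors of the pixels that patch covers.
    flat_rgb = rgb_feature_map.reshape(-1, rgb_feature_map.shape[-1])
    m_a = [flat_rgb[np.asarray(idx)] for idx in patch_pixel_indices]
    return m_s, m_a
```

Over a whole training set, one would stack `m_s` across samples and extend `m_a` in the same order, so that row i of Ms and entry i of MA always refer to the same patch.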

1. Use PointNet to extract all patch-level SDF features {f} of sample x.

2. Use ResNet to get the RGB feature map. Pixels associated with at least one SDF are considered foreground in the 2D RGB image.

3. For each SDF feature in {f}, find its nearest neighbors in Ms to form the respective dictionary, and obtain its approximation f̂ via sparse representation.

4. For each patch of the sample, use the patchwise reconstructed f̂ to compute the signed distances.

5. Take the absolute values of the signed distances from all patches of the sample to form the final SDF score map.

6. For all the relevant SDFs in Ms that were used to compute the sparse representations in step 3, take the union of their associated RGB dictionaries in MA to form a shape-guided RGB dictionary, denoted D.

7. For each foreground RGB feature vector from step 2, find its k nearest neighbors in D and obtain its sparse representation. The ℓ2 distances between the RGB features and their sparse approximations form the final RGB score map.

8. Perform score-map alignment and take the pixelwise maximum of the SDF and RGB responses as the corresponding anomaly score.
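The scoring steps can be sketched with NumPy as follows. This is an illustration under stated assumptions, not the repository's implementation: the sparse-representation step is replaced by an ordinary least-squares fit over the k nearest neighbors (a dedicated sparse solver would additionally limit the number of non-zero coefficients), and score-map alignment is approximated by z-score normalization of each map. All function names are hypothetical.

```python
import numpy as np


def knn_approximation(x, bank, k):
    """Approximate vector x from its k nearest neighbors among the rows of bank.

    Stand-in for the sparse representation: coefficients come from a plain
    least-squares fit over the k-NN dictionary, not from a sparse solver.
    """
    dists = np.linalg.norm(bank - x, axis=1)
    k = min(k, len(bank))
    dictionary = bank[np.argsort(dists)[:k]]                  # (k, d)
    coef, *_ = np.linalg.lstsq(dictionary.T, x, rcond=None)   # solve D^T a ≈ x
    return dictionary.T @ coef                                 # reconstruction


def rgb_scores(features, shape_guided_dict, k):
    """ℓ2 distance between each foreground RGB feature and its approximation."""
    return np.array([
        np.linalg.norm(f - knn_approximation(f, shape_guided_dict, k))
        for f in features
    ])


def fuse_score_maps(sdf_map, rgb_map):
    """Align both maps (z-score, an assumption) and take the pixelwise maximum."""
    def zscore(m):
        return (m - m.mean()) / (m.std() + 1e-8)
    return np.maximum(zscore(sdf_map), zscore(rgb_map))
```

Normalizing both maps before the pixelwise maximum keeps one modality from dominating merely because its raw scores live on a larger scale, which is the reason an alignment step is needed before fusion.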

Result

Usage

1. Prepare your dataset

```
# your dataset structure should be like this
data/
└── train/
    └── good/
        ├── rgb/
        │   └── *.png
        ├── xyz/
        │   └── *.tiff
        ├── npz/
        │   └── *.npz
        └── pretrain/
            └── *.npz
```
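Before preprocessing and training, it can help to confirm that the folders match the tree above. The helper below is a hypothetical convenience, not part of `ecos_core`; it assumes `npz/` and `pretrain/` sit under `data/train/good/`, which matches the `pretrain_path` in the example `opt.json`.

```python
from pathlib import Path

# Sub-folders of data/train/good/ and the file pattern expected in each,
# taken from the directory tree above.
EXPECTED = {"rgb": "*.png", "xyz": "*.tiff", "npz": "*.npz", "pretrain": "*.npz"}


def check_dataset_layout(root="data", folders=None):
    """Return {sub-folder: file count} for data/train/good; raise if one is missing."""
    folders = EXPECTED if folders is None else folders
    good = Path(root) / "train" / "good"
    counts = {}
    for name, pattern in folders.items():
        folder = good / name
        if not folder.is_dir():
            raise FileNotFoundError(f"missing folder: {folder}")
        counts[name] = len(list(folder.glob(pattern)))
    return counts
```

Before preprocessing only `rgb/` and `xyz/` exist, so check them alone with `check_dataset_layout("data", {"rgb": "*.png", "xyz": "*.tiff"})`.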

2. Preprocessing

Remove the background of the point cloud:

```python
from ecos_core.shape_guided3D.utils.preprocessing import preprocess_pc

preprocess_pc(path)
```

where `path` is the path to a .tiff point-cloud file.
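`preprocess_pc` handles one file at a time. A small loop over a category's `xyz/` folder could look like this; `preprocess_folder` is a hypothetical helper that takes the preprocessing function as an argument, so it can be tried out without `ecos_core` installed.

```python
from pathlib import Path
from typing import Callable, List


def preprocess_folder(xyz_dir, preprocess: Callable[[str], object]) -> List[str]:
    """Call `preprocess` on every .tiff file in xyz_dir (sorted); return the paths."""
    paths = sorted(str(p) for p in Path(xyz_dir).glob("*.tiff"))
    for path in paths:
        preprocess(path)   # e.g. preprocess_pc from ecos_core
    return paths
```

For example, `preprocess_folder("data/train/good/xyz", preprocess_pc)`. Whether `preprocess_pc` overwrites its input or writes a new file is not stated here, so keep a copy of the raw data.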

Divide the point cloud of each instance into multiple local patches:

```python
from ecos_core.shape_guided3D.utils.cut_patches import get_data_loader

get_data_loader('train', cls, datasets_path, save_grid_path, group_mul, group_size, sample_num)
```

Cut the patches for the pretraining data:

```python
from ecos_core.shape_guided3D.utils.cut_patches import get_data_loader

get_data_loader('pretrain', cls, datasets_path, save_grid_path, group_mul, group_size, sample_num)
```

where:

- `cls`: category in the dataset.
- `datasets_path`: path of the preprocessed dataset.
- `save_grid_path`: path where the patches are saved.
- `group_mul`: controls the number of patches: number of patches = (group_mul * number of points) / point_num.
- `group_size`: number of points in each patch.
- `sample_num`: number of randomly sampled noise points for pretraining.
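The sketch below illustrates one common way to cut overlapping patches: farthest point sampling for the patch centers, then k-nearest-neighbor grouping around each center. It is an assumption that the repository cuts patches this way, and the sketch also assumes `point_num` in the formula equals `group_size`. Under that assumption each point lands in about `group_mul` patches on average, since N·group_mul/group_size patches of group_size points fill N·group_mul point slots. Function names are hypothetical.

```python
import numpy as np


def num_patches(num_points, group_mul, point_num):
    """(group_mul * points) / point_num, rounded down (rounding is an assumption)."""
    return (group_mul * num_points) // point_num


def farthest_point_sample(points, n_centers, seed=0):
    """Pick n_centers point indices spread over the cloud."""
    rng = np.random.default_rng(seed)
    centers = np.empty(n_centers, dtype=int)
    centers[0] = rng.integers(len(points))
    min_dist = np.full(len(points), np.inf)
    for i in range(1, n_centers):
        # Distance of every point to its closest center chosen so far.
        d = np.linalg.norm(points - points[centers[i - 1]], axis=1)
        min_dist = np.minimum(min_dist, d)
        centers[i] = int(np.argmax(min_dist))
    return centers


def cut_patches(points, group_mul=5, group_size=500, seed=0):
    """Split an (N, 3) cloud into patches of the group_size nearest neighbors."""
    n_centers = min(len(points), max(1, num_patches(len(points), group_mul, group_size)))
    centers = farthest_point_sample(points, n_centers, seed)
    k = min(group_size, len(points))
    patches = []
    for c in centers:
        d = np.linalg.norm(points - points[c], axis=1)
        patches.append(points[np.argsort(d)[:k]])
    return np.stack(patches)   # (n_centers, k, 3)
```

With the example `opt.json` values (`group_mul` 5, `point_num` 500), a 10,000-point cloud gives 100 patches.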

3. Train

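```python
import json
from argparse import ArgumentParser

from ecos_core.shape_guided3D.shape_guide3D import Model

opt_path = "opt.json"  # path to the options file shown below

parser = ArgumentParser()
opts = parser.parse_args()

# Replace the parsed namespace with the options from opt.json
with open(opt_path, 'r') as f:
    opts.__dict__ = json.load(f)

model = Model(opts)
model.fit()
```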

with `opt.json` containing, for example:

```json
{
    "image_size": 224,
    "sampled_size": 20,
    "point_num": 500,
    "group_mul": 5,
    "batch_size": 32,
    "datasets_path": "./data/train",
    "grid_path": "./data/train",
    "pretrain_path": "./data/train/good/pretrain",
    "method_name": ["RGB", "SDF", "RGB_SDF"],
    "epoch": 1,
    "k_number": 10,
    "learning_rate": 0.0001,
    "epoch_per_save": 0,
    "output_path": "./result"
}
```

- `datasets_path`: the training dataset, i.e. `./data/train/` in the structure above.
- `grid_path`: the grid (patch) path after preprocessing, i.e. `./data/train/` in the structure above.
- `pretrain_path`: the grid path after cutting the pretraining patches (`get_data_loader('pretrain', ...)`), i.e. `./data/train/good/pretrain` in the structure above.
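As a sketch, the options file could also be loaded straight into an `argparse.Namespace` with an early check for missing keys. `load_options` is hypothetical, and treating every key of the example as required is an assumption; `Model` may tolerate some being absent.

```python
import json
from argparse import Namespace

# Keys that appear in the example opt.json above.
REQUIRED_KEYS = {
    "image_size", "sampled_size", "point_num", "group_mul", "batch_size",
    "datasets_path", "grid_path", "pretrain_path", "method_name",
    "epoch", "k_number", "learning_rate", "epoch_per_save", "output_path",
}


def load_options(opt_path):
    """Load opt.json into a Namespace, failing early if an expected key is missing."""
    with open(opt_path, "r") as f:
        options = json.load(f)
    missing = REQUIRED_KEYS - set(options)
    if missing:
        raise KeyError(f"opt.json is missing: {sorted(missing)}")
    return Namespace(**options)
```

`Model(load_options("opt.json"))` then yields the same attributes as the parser-plus-`__dict__` pattern in the training snippet.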

4. Predict

```python
import json
from argparse import ArgumentParser

from ecos_core.shape_guided3D.shape_guide3D import Model

opt_path = "opt.json"  # same options file as for training

parser = ArgumentParser()
opts = parser.parse_args()
with open(opt_path, 'r') as f:
    opts.__dict__ = json.load(f)

# custom_weight, img, tiff_path and output_path are supplied by you
model = Model(opts)
model.build_model(custom_weight)
pred = model.predict(img, tiff_path)
model.process_predictions(pred, img, '', '', output_path)
```

where:

- `tiff_path`: path to a .tiff file that has already been preprocessed.
- `img`: path to the RGB image file.
- `output_path`: path where the output image is saved.
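To run prediction over a whole folder, RGB images and point clouds have to be paired first. The hypothetical helper below matches them by file stem (e.g. `000.png` with `000.tiff`), which is an assumption about how your files are named.

```python
from pathlib import Path


def pair_inputs(rgb_dir, xyz_dir):
    """Return (image_path, tiff_path) pairs whose files share a stem."""
    tiffs = {p.stem: p for p in Path(xyz_dir).glob("*.tiff")}
    pairs = []
    for img in sorted(Path(rgb_dir).glob("*.png")):
        tiff = tiffs.get(img.stem)
        if tiff is not None:          # skip images without a point cloud
            pairs.append((str(img), str(tiff)))
    return pairs
```

Each `(img, tiff_path)` pair can then be passed to `model.predict` and `model.process_predictions` as in the prediction snippet; the .tiff files still need to be preprocessed first.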

APIs

Model

Bases: BaseModel

This is the base model for Shape Guide

__init__(opt=None)

This function is called when creating a new instance of the Shape Guide model.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| `opt` | `Namespace` | The default options necessary for initializing the model. `opt` should include these attributes: `weight`. | `None` |

build_model(custom_weight)

Build the model for detection.

Returns:

| Name | Type | Description |
|---|---|---|
| | `Model` | Inherited from the model base class. |

get_transform()

Get the image transform function used for preprocessing.

Returns:

| Name | Type | Description |
|---|---|---|
| `transform` | `func` | Transform function. It takes an image path as input and outputs `raw_image` (the image as a NumPy array) and `image_transform` (the image as a PyTorch tensor). |

predict(image_path, tiff_path)

Run prediction on an RGB image and its point cloud.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| `image_path` | `str` | Path to the RGB image. | required |
| `tiff_path` | `str` | Path to the .tiff file (point cloud). | required |

Returns:

| Name | Type | Description |
|---|---|---|
| `pred` | `Tuple` | Tuple of the image score and the anomaly map. |
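Assuming the returned tuple is ordered `(image_score, anomaly_map)` as the description suggests, a caller might unpack it and derive a binary defect mask as below. `summarize_prediction` and its `threshold` are hypothetical; in practice a threshold would be chosen on validation data.

```python
import numpy as np


def summarize_prediction(pred, threshold):
    """Unpack (image_score, anomaly_map); return score, binary mask, anomalous fraction."""
    image_score, anomaly_map = pred
    anomaly_map = np.asarray(anomaly_map, dtype=float)
    mask = anomaly_map > threshold        # True where a pixel is flagged anomalous
    return float(image_score), mask, float(mask.mean())
```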

process_predictions(pred, raw_image_path, raw_image_mat, image_transform, save_image_path)

Post-process the output of the network.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| `pred` | `Tuple` | Output of detection, as returned by `predict`. | required |
| `raw_image_path` | `str` | Raw image path. | required |

save_model(write_memory=False)

Save the model after training and building the memory bank.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| `write_memory` | `bool` | If True, write the memory bank into the weights. Defaults to False. | `False` |