LOCA

Overview

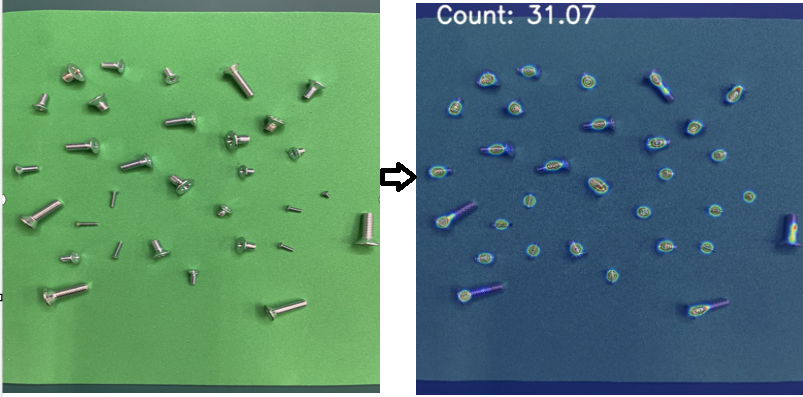

LOCA (A Low-Shot Object Counting Network With Iterative Prototype Adaptation) introduces a new object prototype extraction module that iteratively fuses exemplar shape and appearance information with image features.

Original git: https://github.com/djukicn/loca

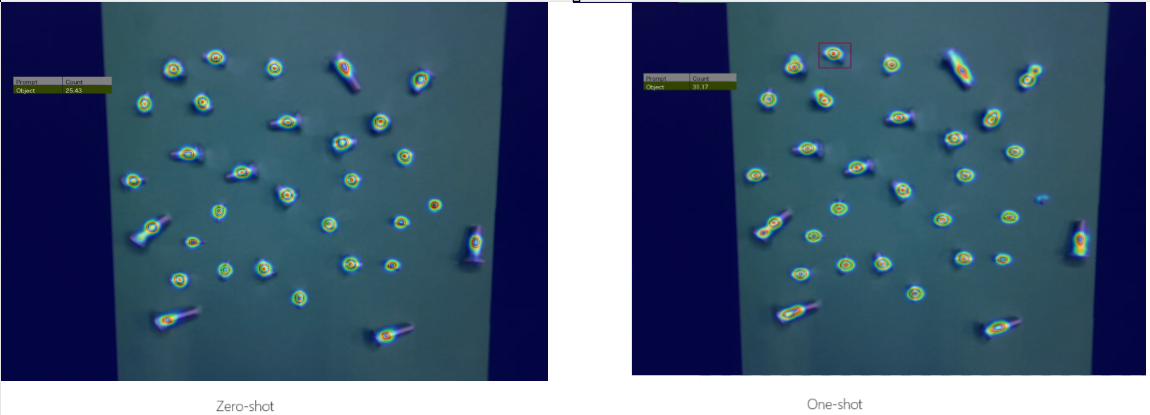

Zero-shot learning is a machine learning setting in which a model trained on a set of seen classes is applied to a set of unseen classes without any further training. This lets the model make predictions for new classes without being given direct examples of them.

One-shot learning is a machine learning setting in which a model learns to classify objects from very little data (usually one example). Unlike typical machine learning models, which require large amounts of data for training and object classification, one-shot learning identifies similarities between objects from very small amounts of data.

In LOCA, using one-shot mode improves detection ability, because the exemplar gives the model more information about what to detect.

Download pretrained models

Usage:

Initialize model

The build_model method can be used in the following ways:

import json
import os
import sys
from pathlib import Path

FILE = Path(__file__).resolve()
FILE_DIR = os.path.dirname(__file__)
ROOT = FILE.parents[0]
if str(ROOT) not in sys.path:
    sys.path.append(str(ROOT))
sys.path.insert(-1, os.path.join(os.path.dirname(__file__), "..", ".."))

from easydict import EasyDict
from ecos_core.loca.loca import Model

# opt_path must point to your opt.json (see Configs)
with open(opt_path, 'r') as f:
    opts = EasyDict(json.load(f))

model_instance = Model(opts)

- Use custom weight:

# Build model with custom weight

model = model_instance.build_model(custom_weight=<PATH_TO_WEIGHT>)

- Build model with default options (weight will be downloaded and saved into ecos_core)

model = model_instance.build_model()

- Build model with default options, overriding the weights path (weights will be downloaded and saved relative to the deployed model)

# override weight path

self.opt.weights = os.path.join(FILE_DIR, self.opt.weights)

# build model

model = model_instance.build_model()
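
The weights-path override above joins the directory of the calling file with the relative path from opt.json. A minimal standalone sketch of that resolution, using only the standard library, could look like this. The helper name resolve_weights and the directory /srv/deploy/loca are hypothetical and are not part of ecos_core.

```python
import os

def resolve_weights(base_dir, weights):
    """Join a weights path onto base_dir and normalize the result.

    os.path.join returns an absolute `weights` path unchanged, so
    absolute paths from the config are kept (apart from normalization).
    """
    return os.path.normpath(os.path.join(base_dir, weights))

# Hypothetical deploy directory; the relative path is the one from opt.json.
print(resolve_weights("/srv/deploy/loca", "./weights/loca_zero_shot.pt"))
```

Normalizing the joined path removes the leading "./" segment, which keeps log messages and cache lookups consistent.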

Predict

Predict to get density maps:

output = model.predict(input_path)

Predict and count objects:

model.process_predictions("", input_path, "", "", output_path)
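
In density-based counting, the object count is usually read off the density map by summing all of its cells. The sketch below shows that step on a toy map built from plain Python lists. Whether process_predictions computes the count exactly this way is an assumption, and count_from_density is a hypothetical helper.

```python
def count_from_density(density_map):
    """Sum all cells of a 2D density map; return (raw sum, rounded count)."""
    total = sum(sum(row) for row in density_map)
    return total, round(total)

# Toy 3x4 density map: two blurred "objects", each integrating to 1.
toy_map = [
    [0.0, 0.25, 0.25, 0.0],
    [0.0, 0.25, 0.25, 0.0],
    [0.5, 0.5, 0.0, 0.0],
]
total, count = count_from_density(toy_map)
print(total, count)
```

With the torch.Tensor returned by predict, the equivalent raw sum would be output.sum().item().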

Configs

Example of opt.json:

{
    "zero_shot": true,
    "image_size": 512,
    "num_heads": 8,
    "weights": "./weights/loca_zero_shot.pt",
    "weights_url": "https://ecos-ai-test-upload.s3.ap-northeast-1.amazonaws.com/models/LOCA/loca_zero_shot.pt",
    "bounding_boxes": [],
    "show_labels": true,
    "type": "pytorch",
    "type_shot": "zero-shot"
}

Where:

- weights: path to the weights file. For zero-shot, specify loca_zero_shot.pt; otherwise use loca_few_shot.pt
- weights_url: URL from which the weights are downloaded
- zero_shot: true if you want to use zero-shot
- image_size: image size
- num_heads: number of attention heads in the model
- bounding_boxes: bounding boxes of each shot; it must be a 2D array. For example, for one-shot: [[10, 10, 20, 20]], and make sure you set "zero_shot": false
- show_labels: show labels if true
- type_shot: number of shots when the model is released to Applications. Example: "one-shot"
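
The rules above for zero_shot and bounding_boxes can be checked before the options are handed to the model. The following is a minimal sketch under stated assumptions: check_options is a hypothetical helper that is not part of ecos_core, the 4-number box layout is inferred from the example above, and treating "zero_shot": false with no boxes as a problem is an assumption.

```python
import json

def check_options(opts):
    """Return a list of problems found in a LOCA-style options dict."""
    problems = []
    boxes = opts.get("bounding_boxes", [])
    # bounding_boxes must be a 2D array; 4 numbers per box is an assumption.
    for box in boxes:
        is_box = (
            isinstance(box, list)
            and len(box) == 4
            and all(isinstance(v, (int, float)) for v in box)
        )
        if not is_box:
            problems.append(f"box {box!r} is not a list of 4 numbers")
    if opts.get("zero_shot") and boxes:
        problems.append("zero_shot is true but bounding_boxes is not empty")
    if not opts.get("zero_shot") and not boxes:
        # Assumption: few-shot mode needs at least one exemplar box.
        problems.append("zero_shot is false but no bounding_boxes were given")
    return problems

one_shot = json.loads('{"zero_shot": false, "bounding_boxes": [[10, 10, 20, 20]]}')
print(check_options(one_shot))  # an empty list means no problems were found
```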

APIs

Model

Bases: BaseModel

Model that counts objects using LOCA, a low-shot object counting network.

build_model(custom_weight=None)

Build the LOCA model.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| custom_weight | str | Custom weight. Defaults to None. | None |

Returns:

| Name | Type | Description |
|---|---|---|
| model | | LOCA model |

get_transform()

Transform input by ImageNet normalization.

Returns:

| Name | Type | Description |
|---|---|---|
| transform | func | Transform function. It takes an image path as input and outputs raw_image (the image as a NumPy matrix) and image_transform (the image as a PyTorch tensor). Example: torchvision.transforms.transforms: data transforms function |
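
ImageNet normalization subtracts a per-channel mean and divides by a per-channel standard deviation. The sketch below applies it to single RGB pixels in plain Python, using the constants commonly used with torchvision pretrained models. Whether get_transform uses exactly these values is an assumption, and normalize_pixel is a hypothetical helper.

```python
# Per-channel statistics commonly used for ImageNet-pretrained models.
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)

def normalize_pixel(rgb):
    """Normalize one RGB pixel whose channels are already scaled to [0, 1]."""
    return tuple((v - m) / s for v, m, s in zip(rgb, IMAGENET_MEAN, IMAGENET_STD))

print(normalize_pixel(IMAGENET_MEAN))    # the mean pixel maps to all zeros
print(normalize_pixel((1.0, 1.0, 1.0)))  # a white pixel maps to positive values
```

On tensors, torchvision.transforms.Normalize(mean, std) performs the same per-channel operation.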

predict(image_path)

Run prediction on the image at the given path.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| image_path | str | Location of image | required |

Returns:

| Type | Description |
|---|---|
| torch.Tensor | Density map |

process_predictions(net_output, raw_image_path, raw_image_mat, image_transform, save_image_path)

Post-process the output of the network.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| net_output | _type_ | output of detection net | required |
| raw_image_path | str | raw image path | required |

Returns:

| Name | Type | Description |
|---|---|---|
| save_image_path | str | save image path |

reload_param(opt_path='./opt.json')

Reload parameters from the options file.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| opt_path | str | Opt path. Defaults to "./opt.json". | './opt.json' |
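
Reloading parameters amounts to re-reading the JSON options file. The sketch below illustrates that idea with the standard library only. It is not the actual reload_param implementation: load_options is a hypothetical helper, and types.SimpleNamespace stands in for EasyDict to give attribute-style access to top-level keys.

```python
import json
import os
import tempfile
from types import SimpleNamespace

def load_options(opt_path="./opt.json"):
    """Read a JSON options file and expose its top-level keys as attributes."""
    with open(opt_path, "r", encoding="utf-8") as f:
        return SimpleNamespace(**json.load(f))

# Write a small options file to a temporary directory, then reload it.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "opt.json")
    with open(path, "w", encoding="utf-8") as f:
        json.dump({"zero_shot": True, "image_size": 512}, f)
    opts = load_options(path)

print(opts.zero_shot, opts.image_size)
```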